Introducing a middleware for the Caddy web server that handles Edge Side Includes (ESI) tags. ESI tags are used to query backend (micro) services. This middleware supports Redis, Memcache, HTTP/HTTPS, HTTP2, shell scripts, SQL and gRPC (Protocol Buffers).

ESI tags are used to fetch content from a backend resource and inject that content into the returned page, which is then displayed in, for example, a browser. ESI tags are not limited to HTML; you can include them in any text format.

Warning: Project still in alpha phase as there is a terrible race condition.

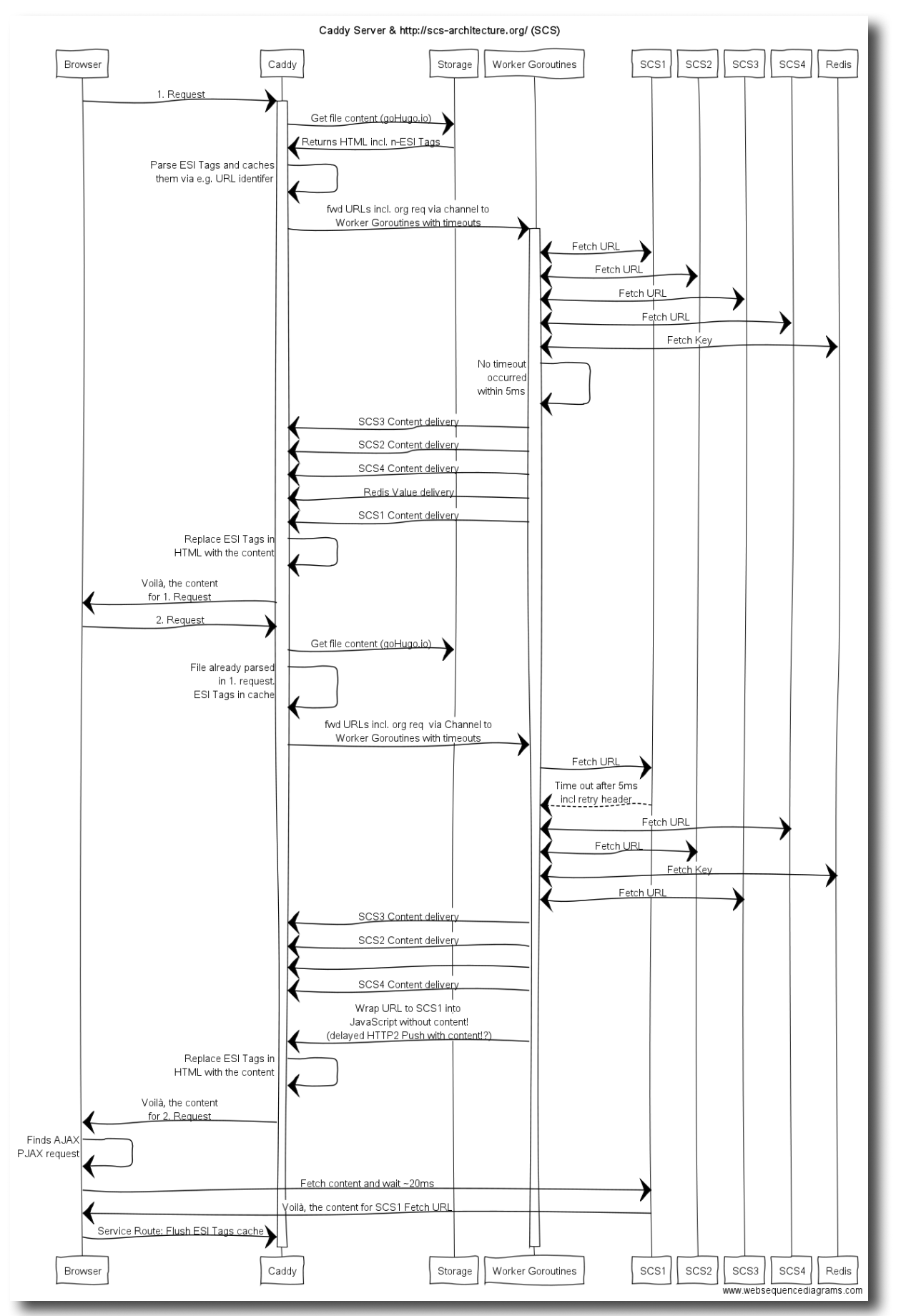

Architectural overview

As the sequence diagram above shows, the first byte is sent only after all data from the backend resources has been received. This lets us calculate the correct Content-Length header and also return headers from the backend to the client. As a result, the time to first byte (TTFB) depends on the slowest backend resource.

Future versions of ESI for Caddy will provide the additional option of enabling Transfer-Encoding: chunked, so that output starts immediately and waits for the backend resources only once the first ESI tag occurs in the page.
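The buffering behaviour described above can be pictured with a small sketch. It is not the middleware's code, only a minimal Python illustration: fetch_fragment, render_page and the backend names cart and account are assumptions made up for the example, and the sleeps stand in for real network calls.

```python
import asyncio


async def fetch_fragment(name: str, delay: float) -> str:
    # Stand-in for a backend call; the delay simulates network latency.
    await asyncio.sleep(delay)
    return f"<p>{name}</p>"


async def render_page(backends: dict) -> tuple:
    # Query all backends concurrently and wait until every one has answered.
    fragments = await asyncio.gather(
        *(fetch_fragment(name, delay) for name, delay in backends.items())
    )
    body = "".join(fragments).encode("utf-8")
    # The whole body exists before the first byte is sent,
    # so Content-Length can be stated exactly.
    headers = {"Content-Length": str(len(body))}
    return headers, body


headers, body = asyncio.run(render_page({"cart": 0.02, "account": 0.01}))
```

Because asyncio.gather returns only when the slowest call has finished, the total wait is roughly the longest single delay rather than the sum of all delays, which mirrors the TTFB remark above.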

Features

- Query data from HTTP, HTTPS, HTTP2, gRPC, Redis, Memcache and soon SQL and fCGI.

- Multiple incoming requests trigger only one single parsing of the ESI tags per page

- Query multiple backend servers in parallel and concurrently

- Coalesce multiple incoming requests into one single request to a backend server

- Forwarding and returning (todo) of HTTP headers from backend servers

- Query multiple backend servers sequentially as a fall back mechanism

- Query multiple backend servers in parallel, use the first returned result and discard the other responses, an obvious race condition. (todo)

- Support for NoSQL servers to query a key and simply display its value

- Variable support based on Server, Cookie, Header or GET/POST form parameters

- Error handling and failover. Display either a text string or the content of a static file when a backend server is unavailable.

- If an HTTP/S backend request takes longer than the specified timeout, flip the source URL into an XHR request or an HTTP2 push. (todo)

- No full ESI support; if desired, use a scripting language ;-)
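The request coalescing mentioned in the list above can be sketched as follows. This is a minimal Python illustration under stated assumptions, not the middleware's implementation: the names Coalescer and CountingBackend and the key product/42 are hypothetical.

```python
import asyncio


class Coalescer:
    """Share one in-flight backend call among all concurrent callers of a key."""

    def __init__(self):
        self._inflight = {}  # key -> asyncio.Task of the running backend call

    async def do(self, key, fetch):
        task = self._inflight.get(key)
        if task is None:
            # First caller for this key: start the real backend request.
            task = asyncio.ensure_future(fetch(key))
            self._inflight[key] = task
            # Forget the task once it finishes so later requests fetch fresh data.
            task.add_done_callback(lambda _done: self._inflight.pop(key, None))
        # Every caller, first or not, waits on the same task.
        return await task


class CountingBackend:
    """Hypothetical slow backend that records how often it was really called."""

    def __init__(self):
        self.calls = 0

    async def fetch(self, key):
        self.calls += 1
        await asyncio.sleep(0.01)  # simulated network latency
        return f"value-for-{key}"


async def demo():
    backend = CountingBackend()
    coalescer = Coalescer()
    # Five simultaneous page requests that all need the same backend key.
    results = await asyncio.gather(
        *(coalescer.do("product/42", backend.fetch) for _ in range(5))
    )
    return backend.calls, results
```

Running asyncio.run(demo()) gives five identical results while the backend is contacted only once.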

More details on how Edge Side Includes are officially defined.

This middleware implements only the <esi:include /> tag, with different features and interpretations.
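To make the idea of recognising only <esi:include /> concrete, here is a rough Python sketch that extracts the tags and their attributes from a page. It is an illustration only: the regular expressions and the function parse_includes are assumptions for this example, and a value containing the characters /> would confuse this simple pattern.

```python
import re

# Matches a self-closing <esi:include ... /> tag and captures its attribute text.
TAG_RE = re.compile(r"<esi:include\s+(.*?)/>", re.DOTALL)
# Matches name="value" pairs inside the captured attribute text.
ATTR_RE = re.compile(r'([\w-]+)="([^"]*)"')


def parse_includes(page: str) -> list:
    """Return one dict of attributes per <esi:include /> tag, in page order."""
    return [dict(ATTR_RE.findall(m.group(1))) for m in TAG_RE.finditer(page)]


page = '<p>Hi</p><esi:include src="myRedis" key="station/jazzloft" /><p>Bye</p>'
tags = parse_includes(page)
```

Any other ESI tag is simply left untouched by such a parser, which matches the "no full ESI support" remark in the feature list.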

Example

The following ESI tag has been embedded in this Markdown document:

<esi:include src="grpcServerDemo" printdebug="1" key="session_{Fsession}"

forwardheaders="all" timeout="4ms" onerror="Demo gRPC server unavailable :-("/>

Short explanation for the tag above:

- grpcServerDemo is an alias stored in the resources file, which is not web accessible because it can contain sensitive data.

- printdebug prints the duration, possible error messages and the parsed tag. You should only enable it during development.

- session_{Fsession}: the uppercase F declares that the variable session can be found in the form, either GET or POST/PATCH/PUT.

- forwardheaders forwards all headers from your browser to the micro service. You can also define specific headers only.

- timeout: if the gRPC service takes longer than the given 4ms, the error message in onerror gets displayed.
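Two of the attributes explained above, key with a form variable and timeout with onerror, can be illustrated with a short Python sketch. It is not how the middleware is implemented: resolve_key, include_with_timeout and both backend functions are hypothetical, only the F (form) prefix is handled, and replacing a missing variable with an empty string is an assumption of this example.

```python
import asyncio
import re


def resolve_key(template: str, form: dict) -> str:
    # Replace {Fname} with the form value "name"; a missing value becomes "".
    return re.sub(r"\{F(\w+)\}", lambda m: form.get(m.group(1), ""), template)


async def include_with_timeout(fetch, key: str, timeout: float, onerror: str) -> str:
    try:
        # Give the backend at most `timeout` seconds to answer.
        return await asyncio.wait_for(fetch(key), timeout)
    except (asyncio.TimeoutError, OSError):
        # Too slow or unreachable: show the onerror text instead.
        return onerror


async def slow_backend(key: str) -> str:
    await asyncio.sleep(0.2)  # far slower than a 4ms budget
    return f"counter for {key}"


async def fast_backend(key: str) -> str:
    return f"counter for {key}"


key = resolve_key("session_{Fsession}", {"session": "abc"})
slow = asyncio.run(
    include_with_timeout(slow_backend, key, 0.004, "Demo gRPC server unavailable :-(")
)
fast = asyncio.run(include_with_timeout(fast_backend, key, 1.0, "unused"))
```

With the slow backend the page shows the onerror text; with the fast one it shows the backend's answer.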

The content of the resources file looks like this:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>

<items>

<item>

<alias>grpcServerDemo</alias>

<url><![CDATA[grpc://127.0.0.1:42042]]></url>

</item>

</items>
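As an illustration of how such a file maps aliases to URLs, the following Python sketch reads the XML shown above into a dictionary. The function load_aliases is an assumption for this example; the middleware's own loader may work differently.

```python
import xml.etree.ElementTree as ET

RESOURCES_XML = """<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<items>
  <item>
    <alias>grpcServerDemo</alias>
    <url><![CDATA[grpc://127.0.0.1:42042]]></url>
  </item>
</items>"""


def load_aliases(xml_text: str) -> dict:
    """Map each <alias> to the URL stored in the same <item>."""
    # Parse from bytes so the encoding declaration in the XML header is honoured.
    root = ET.fromstring(xml_text.encode("utf-8"))
    return {
        item.findtext("alias").strip(): item.findtext("url").strip()
        for item in root.iter("item")
    }


aliases = load_aliases(RESOURCES_XML)
```

An ESI tag with src="grpcServerDemo" would then be resolved through this mapping, so the real address never has to appear in the page itself.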

The following form enables you to create different session counters:

Now open the Web Inspector panel and search for the HTML comment containing the printdebug output. The value Next Session Integer gets reset after a specific, short amount of time.

Click here to discover the source for the gRPC server

Documentation

Please switch to the README.md in the GitHub source code.

JSON Example

The Redis feature is not enabled by default.

Let's say you want to output music stations as JSON. First, we configure the backend resource alias “myRedis”. The URL of the Redis server is stored in the resource configuration file (see Caddyfile). Second, we add the ESI tags to the main JSON array; each tag defines the src, and each key points to a key in the Redis server. The value of each key contains a valid JSON object. Once the page is requested, e.g. by a browser, the ESI middleware queries Redis in parallel and inserts the values into the main JSON array. As Redis is single threaded, you can define additional Redis sources to gain even more performance. Finally, you only need to write a program that inserts the keys and values into Redis.

[

<esi:include src="myRedis" key="station/best_of_80s" />,

<esi:include src="myRedis" key="station/herrmerktradio" />,

<esi:include src="myRedis" key="station/bluesclub" />,

<esi:include src="myRedis" key="station/jazzloft" />,

<esi:include src="myRedis" key="station/jahfari" />,

<esi:include src="myRedis" key="station/maximix" />,

<esi:include src="myRedis" key="station/ondalatina" />,

<esi:include src="myRedis" key="station/deepgroove" />,

<esi:include src="myRedis" key="station/germanyfm" />,

<esi:include src="myRedis" key="station/alternativeworld" />

]
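What the middleware does with such a template can be simulated in a few lines of Python. This is only a sketch: a plain dictionary stands in for the Redis server, the station values are invented sample data, and render is a hypothetical helper.

```python
import json
import re

# Stand-in for Redis: each key holds a JSON object as a string (made-up values).
FAKE_REDIS = {
    "station/bluesclub": '{"name": "Bluesclub", "genre": "blues"}',
    "station/jazzloft": '{"name": "Jazzloft", "genre": "jazz"}',
}

TEMPLATE = """[
<esi:include src="myRedis" key="station/bluesclub" />,
<esi:include src="myRedis" key="station/jazzloft" />
]"""

TAG_RE = re.compile(r'<esi:include\s+src="myRedis"\s+key="([^"]+)"\s*/>')


def render(template: str, store: dict) -> str:
    # Replace every tag with the stored value of its key.
    return TAG_RE.sub(lambda m: store[m.group(1)], template)


stations = json.loads(render(TEMPLATE, FAKE_REDIS))
```

The commas between the tags belong to the template, so the rendered output is valid JSON only if every stored value is itself a valid JSON object.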

Similar technologies

Varnish ESI Tags

Correct me if I’m wrong:

- No HTTP2 support

- No HTTPS support

- Sequential processing of all ESI tags

- Nearly full support of the ESI tag specification

Stack Overflow: Varnish and ESI, how is the performance?

ESI for Caddy solves this problem: Varnish ESI Streaming Response - Is it possible NOT to stream the response

ESI for Caddy solves this problem: ESchrade - Kevin Schroeder - MAGENTO, ESI, VARNISH AND PERFORMANCE

BigPipe

How it works

- During rendering, the personalized parts are turned into placeholders.

- By default, Drupal 8 uses the Single Flush strategy (aka “traditional”) for replacing the placeholders. i.e. we don’t send a response until we’ve replaced all placeholders.

- The BigPipe module introduces a new strategy, that allows us to flush the initial page first, and then stream the replacements for the placeholders.

- This results in hugely improved front-end/perceived performance (watch the 40-second screencast above).

Download Caddy server incl. ESI support

More details on https://github.com/corestoreio/caddy-esi

TODO ;-)

Cyrill Schumacher's Blog
